- Introduction

- Ordered categorical data

- Ordered categorical data with regression variables

- Discrete-time Markov chain

- Continuous-time Markov chain

Objectives: learn how to implement a model for categorical data, assuming either independence or a Markovian dependence between observations.

Projects: categorical1_project, categorical2_project, markov0_project, markov1a_project, markov1b_project, markov1c_project, markov2_project, markov3a_project, markov3b_project

Introduction

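Assume now that the observed data takes its values in a fixed and finite set of nominal categories $\{c_1, c_2, \ldots, c_K\}$. Considering the observations $(y_{ij},\ 1 \leq j \leq n_i)$ for any individual $i$ as a sequence of conditionally independent random variables, the model is completely defined by the probability mass functions $\mathbb{P}(y_{ij} = c_k \mid \psi_i)$ for $k = 1, \ldots, K$ and $1 \leq j \leq n_i$, where $\psi_i$ denotes the individual parameters. For a given $(i, j)$, the sum of the $K$ probabilities is 1, so in fact only $K-1$ of them need to be defined. In the most general way possible, any model can be considered so long as it defines a probability distribution, i.e., for each $k$, $\mathbb{P}(y_{ij} = c_k \mid \psi_i) \in [0, 1]$, and $\sum_{k=1}^{K} \mathbb{P}(y_{ij} = c_k \mid \psi_i) = 1$.

Ordinal data further assume that the categories are ordered, i.e., there exists an order $\prec$ such that

$$c_1 \prec c_2 \prec \ldots \prec c_K .$$

We can think, for instance, of levels of pain (low $\prec$ moderate $\prec$ severe) or scores on a discrete scale, e.g., from 1 to 10. Instead of defining the probabilities of each category, it may be convenient to define the cumulative probabilities $\mathbb{P}(y_{ij} \preceq c_k \mid \psi_i)$ for $k = 1, \ldots, K-1$, or in the other direction: $\mathbb{P}(y_{ij} \succeq c_k \mid \psi_i)$ for $k = 2, \ldots, K$. Any model is possible as long as it defines a probability distribution, i.e., it satisfies

$$0 \leq \mathbb{P}(y_{ij} \preceq c_1 \mid \psi_i) \leq \mathbb{P}(y_{ij} \preceq c_2 \mid \psi_i) \leq \ldots \leq \mathbb{P}(y_{ij} \preceq c_K \mid \psi_i) = 1 .$$

It is possible to introduce dependence between observations from the same individual by assuming that $(y_{ij},\ j = 1, 2, \ldots, n_i)$ forms a Markov chain. For instance, a Markov chain with memory 1 assumes that all that is required from the past to determine the distribution of $y_{ij}$ is the value of the previous observation $y_{i,j-1}$, i.e., for all $k = 1, 2, \ldots, K$,

$$\mathbb{P}(y_{ij} = c_k \mid y_{i,j-1}, y_{i,j-2}, y_{i,j-3}, \ldots, \psi_i) = \mathbb{P}(y_{ij} = c_k \mid y_{i,j-1}, \psi_i) .$$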

Ordered categorical data

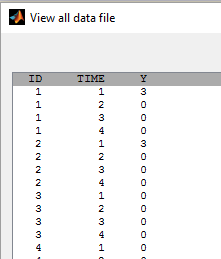

- categorical1_project (data = ‘categorical1_data.txt’, model = ‘categorical1_model.txt’)

In this example, observations are ordinal data that take their values in {0, 1, 2, 3}:

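- Cumulative odds ratios are used in this example to define the model

$$\text{logit}(\mathbb{P}(y_{ij} \leq k)) = \log\left(\frac{\mathbb{P}(y_{ij} \leq k)}{1 - \mathbb{P}(y_{ij} \leq k)}\right)$$

where, writing $\theta_1$, $\theta_2$, $\theta_3$ for the parameters th1, th2, th3 of the model file,

$$\begin{aligned}
\text{logit}(\mathbb{P}(y_{ij} \leq 0)) &= \theta_1 \\
\text{logit}(\mathbb{P}(y_{ij} \leq 1)) &= \theta_1 + \theta_2 \\
\text{logit}(\mathbb{P}(y_{ij} \leq 2)) &= \theta_1 + \theta_2 + \theta_3
\end{aligned}$$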

This model is implemented in categorical1_model.txt:

[LONGITUDINAL]

input = {th1, th2, th3}

EQUATION:

lgp0 = th1

lgp1 = lgp0 + th2

lgp2 = lgp1 + th3

DEFINITION:

level = { type = categorical, categories = {0, 1, 2, 3},

logit(P(level<=0)) = th1

logit(P(level<=1)) = th1 + th2

logit(P(level<=2)) = th1 + th2 + th3

}
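To see how the three cumulative logits in the model above translate into probabilities for the four categories, the following Python sketch inverts the logit and takes successive differences. It is an illustration only and not part of the Monolix project: the helper name `category_probabilities` and the parameter values are assumptions chosen for the example.

```python
import math

def expit(x):
    """Inverse of the logit function."""
    return 1.0 / (1.0 + math.exp(-x))

def category_probabilities(th1, th2, th3):
    """Return [P(level=0), ..., P(level=3)] from the cumulative logits.

    Hypothetical helper mirroring the DEFINITION block of the model file.
    """
    cum_logits = [th1, th1 + th2, th1 + th2 + th3]
    # P(level<=3) = 1 closes the cumulative distribution.
    cum_probs = [expit(value) for value in cum_logits] + [1.0]
    probs = [cum_probs[0]]
    for k in range(1, 4):
        probs.append(cum_probs[k] - cum_probs[k - 1])
    return probs

# Illustrative values only; th2 > 0 and th3 > 0 keep the cumulative logits increasing.
probs = category_probabilities(th1=-1.0, th2=1.5, th3=2.0)
print([round(p, 3) for p in probs])
```

With positive `th2` and `th3`, every difference is positive, so each category receives a valid probability.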

A normal distribution is used for th1, while log-normal distributions for th2 and th3 ensure that these parameters are positive (even without variability), so that the cumulative logits increase with the category.

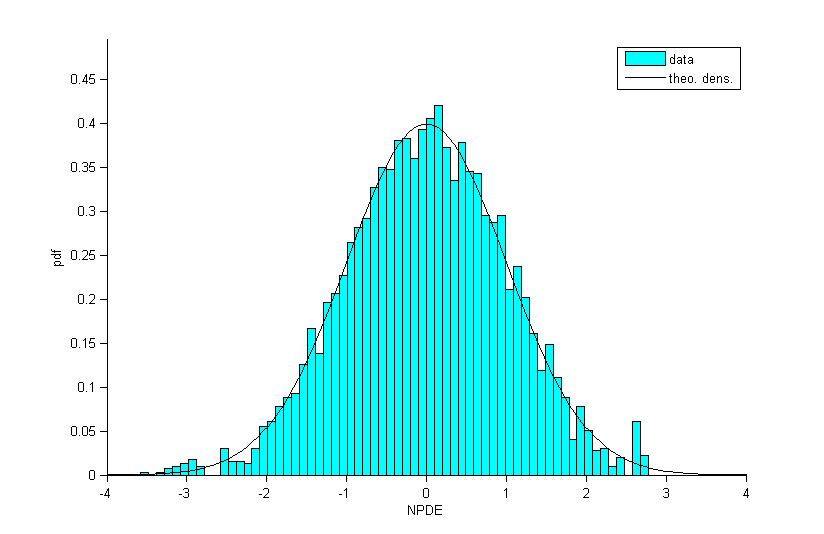

Residuals for noncontinuous data reduce to NPDEs. We can compare the empirical distribution of the NPDEs with the standard normal distribution:

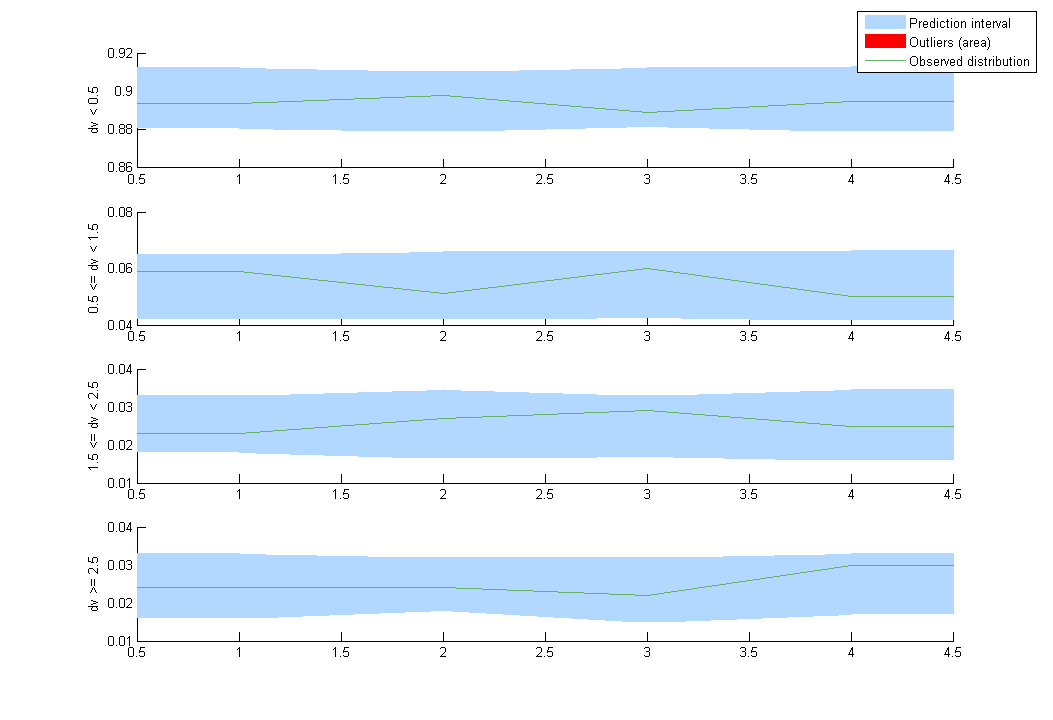

VPCs for categorical data compare the observed and predicted frequencies of each category over time:

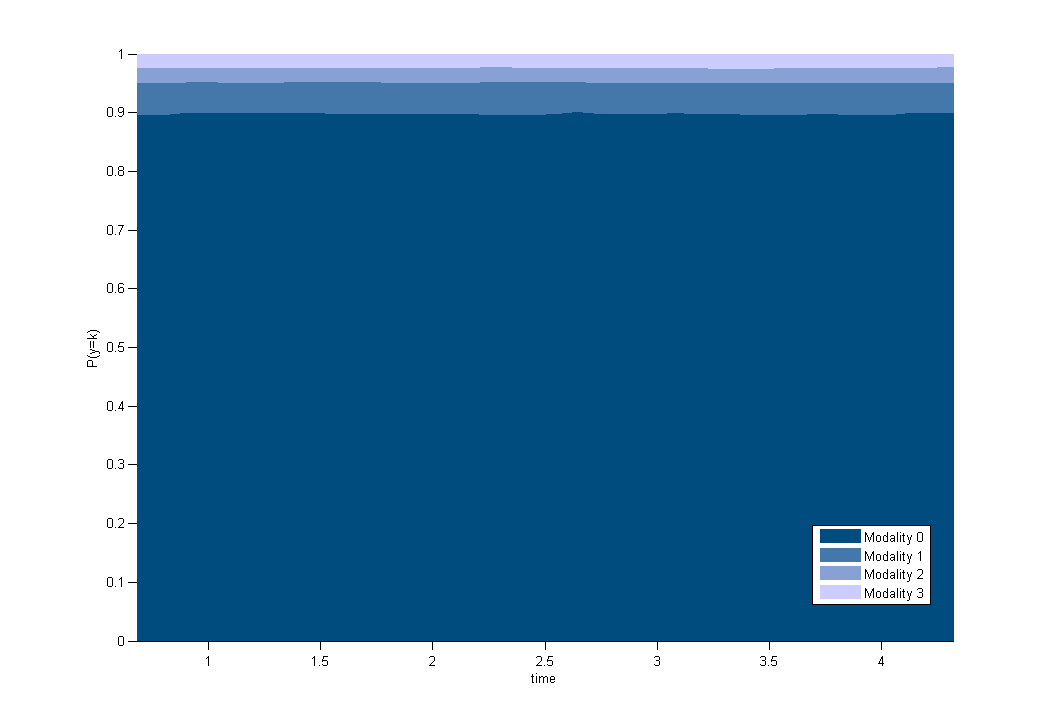

The prediction distribution can also be computed by Monte Carlo simulation.

Ordered categorical data with regression variables

- categorical2_project (data = ‘categorical2_data.txt’, model = ‘categorical2_model.txt’)

A proportional odds model is used in this example, where PERIOD and DOSE are used as regression variables (i.e., time-varying covariates).

Discrete-time Markov chain

If observation times are regularly spaced (constant length of time between successive observations), we can consider the observations to be a discrete-time Markov chain.

- markov0_project (data = ‘markov1a_data.txt’, model = ‘markov0_model.txt’)

In this project, states are assumed to be independent and identically distributed:

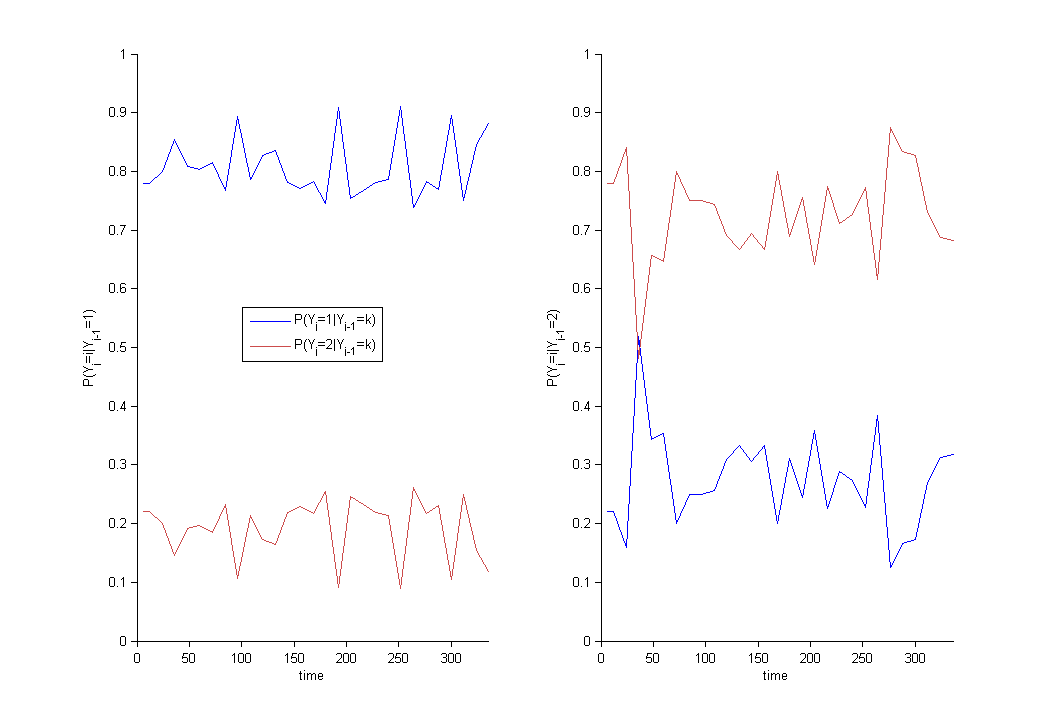

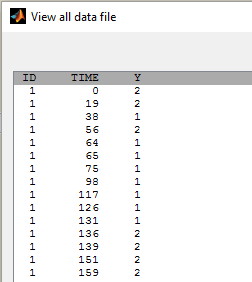

Observations in markov1a_data.txt take their values in {1, 2}. Before fitting any model to this data, it is possible to display the empirical transitions between states by selecting Transition probabilities in the list of selected plots.

We can see that the probability of being in state 1 or 2 clearly depends on the previous state. The proposed model, which assumes independent states, should therefore be discarded.
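The empirical transition probabilities shown in that plot are, in essence, transition counts normalized by the number of visits to the previous state. A minimal Python sketch of this computation for a single sequence is given below; the function name `empirical_transitions` and the sequence are made up for illustration and are not taken from markov1a_data.txt, and how Monolix pools individuals or time bins is not reproduced here.

```python
from collections import Counter

def empirical_transitions(states):
    """Estimate P(next = b | previous = a) from one sequence of states."""
    pair_counts = Counter(zip(states[:-1], states[1:]))
    from_counts = Counter(states[:-1])
    return {(a, b): n / from_counts[a] for (a, b), n in pair_counts.items()}

# Made-up sequence for illustration only.
sequence = [1, 1, 1, 2, 2, 1, 1, 2, 2, 2]
trans = empirical_transitions(sequence)
for (prev, cur), prob in sorted(trans.items()):
    print(f"P(next={cur} | previous={prev}) = {prob:.2f}")
```

If the states were independent, the rows for `previous=1` and `previous=2` would be roughly equal; a clear difference between them points to Markovian dependence.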

- markov1a_project (data = ‘markov1a_data.txt’, model = ‘markov1a_model.txt’)

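Here, the dependence on the previous state is described by two transition probabilities:

$$\begin{aligned}
\mathbb{P}(y_{ij} = 1 \mid y_{i,j-1} = 1) &= 1 - \mathbb{P}(y_{ij} = 2 \mid y_{i,j-1} = 1) = p_{11} \\
\mathbb{P}(y_{ij} = 1 \mid y_{i,j-1} = 2) &= 1 - \mathbb{P}(y_{ij} = 2 \mid y_{i,j-1} = 2) = p_{21}
\end{aligned}$$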

[LONGITUDINAL]

input = {p11, p21}

DEFINITION:

State = {type = categorical, categories = {1,2}, dependence = Markov

P(State=1|State_p=1) = p11

P(State=1|State_p=2) = p21

}

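The distribution of the initial state is not defined in the model, which means that, by default, both initial states are equally likely:

$$\mathbb{P}(y_{i,1} = 1) = \mathbb{P}(y_{i,1} = 2) = 0.5$$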

- markov1b_project (data = ‘markov1b_data.txt’, model = ‘markov1b_model.txt’)

The distribution of the initial state, $p = \mathbb{P}(y_{i,1} = 1)$, is estimated in this example:

DEFINITION:

State = {type = categorical, categories = {1,2}, dependence = Markov

P(State_1=1)= p

P(State=1|State_p=1) = p11

P(State=1|State_p=2) = p21

}
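As a rough illustration of what these three parameters contribute to the likelihood of one individual's sequence, the Python sketch below multiplies the initial-state probability by the successive transition probabilities. The function name `markov_log_likelihood` and all numerical values are assumptions for the example; this is not how Monolix itself is implemented.

```python
import math

def markov_log_likelihood(states, p, p11, p21):
    """Log-likelihood of one sequence of states in {1, 2}.

    p   = P(State_1 = 1)
    p11 = P(State = 1 | State_p = 1)
    p21 = P(State = 1 | State_p = 2)
    """
    prob_of_one = {1: p11, 2: p21}
    # Contribution of the initial state.
    loglik = math.log(p if states[0] == 1 else 1.0 - p)
    # Contribution of each transition from the previous state.
    for prev, cur in zip(states[:-1], states[1:]):
        p1 = prob_of_one[prev]
        loglik += math.log(p1 if cur == 1 else 1.0 - p1)
    return loglik

# Illustrative values only.
ll = markov_log_likelihood([1, 1, 2, 2, 1], p=0.5, p11=0.8, p21=0.3)
print(round(ll, 4))
```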

- markov3a_project (data = ‘markov3a_data.txt’, model = ‘markov3a_model.txt’)

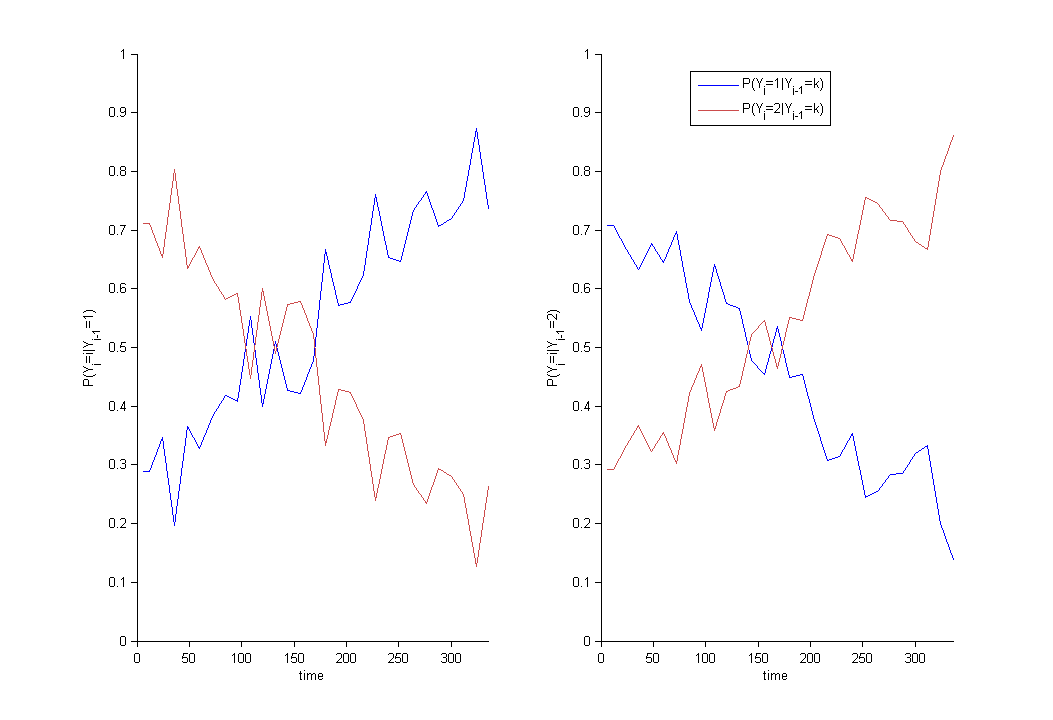

Transition probabilities change with time in this example, so we define time-varying transition probabilities in the model:

[LONGITUDINAL]

input = {a1, b1, a2, b2}

EQUATION:

lp11 = a1 + b1*t/100

lp21 = a2 + b2*t/100

DEFINITION:

State = { type = categorical, categories = {1,2}, dependence = Markov

logit(P(State=1|State_p=1)) = lp11

logit(P(State=1|State_p=2)) = lp21

}
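The effect of the linear-in-time logits can be checked numerically. The Python sketch below evaluates both transition probabilities at a few time points; the helper name `transition_probabilities` and the parameter values are assumptions for illustration, not estimates from markov3a_project.

```python
import math

def expit(x):
    """Inverse of the logit function."""
    return 1.0 / (1.0 + math.exp(-x))

def transition_probabilities(t, a1, b1, a2, b2):
    """Return (p11, p21) at time t, mirroring the EQUATION block of the model."""
    lp11 = a1 + b1 * t / 100
    lp21 = a2 + b2 * t / 100
    return expit(lp11), expit(lp21)

# Illustrative parameter values only.
for t in (0, 50, 100):
    p11, p21 = transition_probabilities(t, a1=0.0, b1=2.0, a2=-1.0, b2=1.0)
    print(t, round(p11, 3), round(p21, 3))
```

Working on the logit scale keeps both probabilities strictly between 0 and 1 for any values of the parameters and any time.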

- markov2_project (data = ‘markov2_data.txt’, model = ‘markov2_model.txt’)

Observations in markov2_data.txt take their values in {1, 2, 3}. The transition matrix then has 3 × 3 entries, and since each of its rows sums to 1, six transition probabilities need to be defined in the model.
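The count of six can be made concrete with a short Python sketch: two free probabilities per previous state, with the third deduced from the row-sum constraint. The function name `transition_matrix` and the values are hypothetical, and markov2_model.txt may well use a different parameterization (for example on a logit scale).

```python
def transition_matrix(free_probs):
    """Build a 3x3 transition matrix from six free probabilities.

    free_probs[l] = (P(next=1 | prev=l), P(next=2 | prev=l)) for l in {1, 2, 3};
    P(next=3 | prev=l) is deduced so that each row sums to 1.
    """
    matrix = {}
    for prev in (1, 2, 3):
        p1, p2 = free_probs[prev]
        p3 = 1.0 - p1 - p2
        if min(p1, p2) < 0 or p3 < 0:
            raise ValueError(f"row {prev} is not a probability distribution")
        matrix[prev] = (p1, p2, p3)
    return matrix

# Illustrative values only.
P = transition_matrix({1: (0.7, 0.2), 2: (0.3, 0.5), 3: (0.1, 0.3)})
for prev, row in P.items():
    print(prev, [round(x, 3) for x in row])
```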

Continuous-time Markov chain

The previous situation can be extended to the case where time intervals between observations are irregular by modeling the sequence of states as a continuous-time Markov process. The difference is that rather than transitioning to a new (possibly the same) state at each time step, the system remains in the current state for some random amount of time before transitioning. This process is now characterized by transition rates instead of transition probabilities:

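$$\mathbb{P}(y_i(t+h) = k \mid y_i(t) = \ell, \psi_i) = h\,\rho_{\ell k}(t, \psi_i) + o(h), \qquad k \neq \ell .$$

The probability that no transition happens between $t$ and $t+h$ is

$$\mathbb{P}(y_i(s) = \ell,\ \forall s \in (t, t+h) \mid y_i(t) = \ell, \psi_i) = e^{h\,\rho_{\ell\ell}(t, \psi_i)} .$$

Furthermore, for any individual $i$ and time $t$, the transition rates $(\rho_{\ell k}(t, \psi_i))$ satisfy for any $1 \leq \ell \leq K$,

$$\sum_{k=1}^{K} \rho_{\ell k}(t, \psi_i) = 0 .$$

Constructing a model therefore means defining parametric functions of time $(\rho_{\ell k})$ that satisfy this condition.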

- markov1c_project (data = ‘markov1c_data.txt’, model = ‘markov1c_model.txt’)

Observation times are irregular in this example, so a continuous-time Markov chain should be used to take into account the Markovian dependence of the data:

DEFINITION:

State = { type = categorical, categories = {1,2}, dependence = Markov

transitionRate(1,2) = q12

transitionRate(2,1) = q21

}
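For two states with constant rates, the probability of being in each state after an arbitrary delay has a closed form, which shows how a single pair of rates handles irregular observation times. The Python sketch below uses that closed form; the function name `two_state_transition_probs` and the numerical values are assumptions for illustration, and it does not cover time-varying rates.

```python
import math

def two_state_transition_probs(q12, q21, h):
    """Transition probabilities over a delay h for constant rates q12, q21 > 0.

    Returns ((P11, P12), (P21, P22)), where Pab = P(state b at t+h | state a at t).
    """
    s = q12 + q21
    decay = math.exp(-s * h)
    p12 = q12 / s * (1.0 - decay)
    p21 = q21 / s * (1.0 - decay)
    return ((1.0 - p12, p12), (p21, 1.0 - p21))

# Illustrative rates only; the delays mimic irregular observation times.
for h in (0.5, 1.0, 4.0):
    (p11, p12), (p21, p22) = two_state_transition_probs(q12=0.2, q21=0.5, h=h)
    print(h, round(p11, 3), round(p12, 3), round(p21, 3), round(p22, 3))
```

As the delay grows, both rows converge to the same limiting distribution, so the influence of the previous state fades between widely spaced observations.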

- markov3b_project (data = ‘markov3b_data.txt’, model = ‘markov3b_model.txt’)

Time-varying transition rates are used in this example.